How to use GraphRAG in the ArangoDB Platform web interface

Learn how to create, configure, and run a full GraphRAG workflow in four steps using the Platform web interface.


The GraphRAG workflow in the web interface

The entire process is organized into sequential steps within a Project:

- Creating the importer service

- Uploading your file and exploring the generated Knowledge Graph

- Creating the retriever service

- Chatting with your Knowledge Graph

Create a GraphRAG project

To create a new GraphRAG project using the ArangoDB Platform web interface, follow these steps:

- From the left-hand sidebar, select the database where you want to create the project.

- In the left-hand sidebar, click GenAI Suite to open the GraphRAG project management interface, then click Run GraphRAG.

- In the GraphRAG projects view, click Add new project.

- The Create GraphRAG project modal opens. Enter a Name and optionally a description for your project.

- Click the Create project button to finalize the creation.

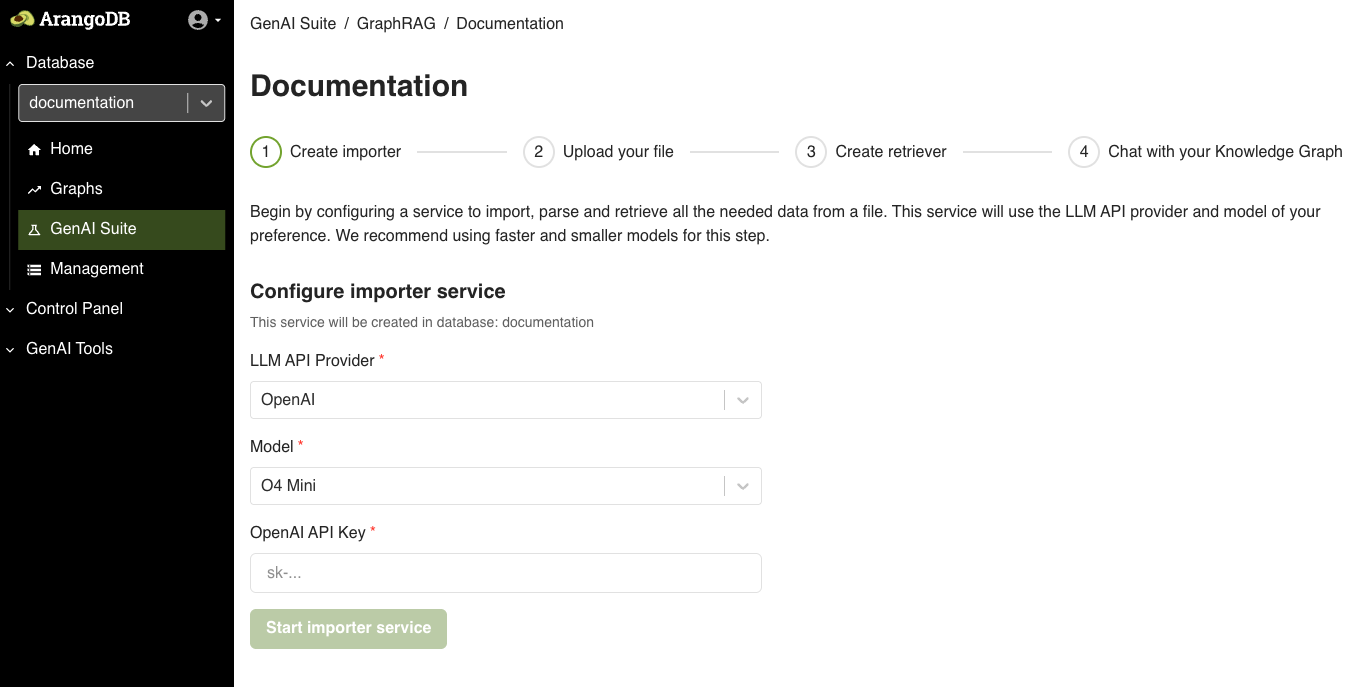

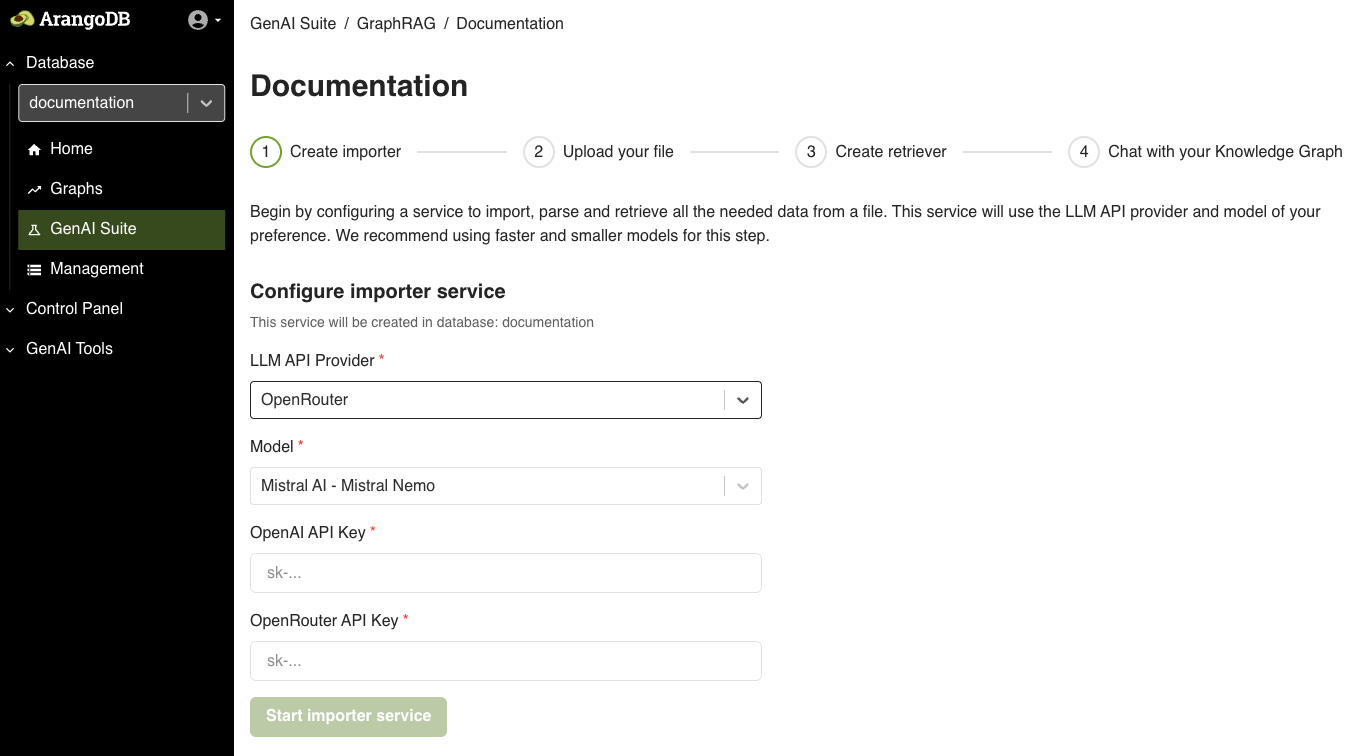

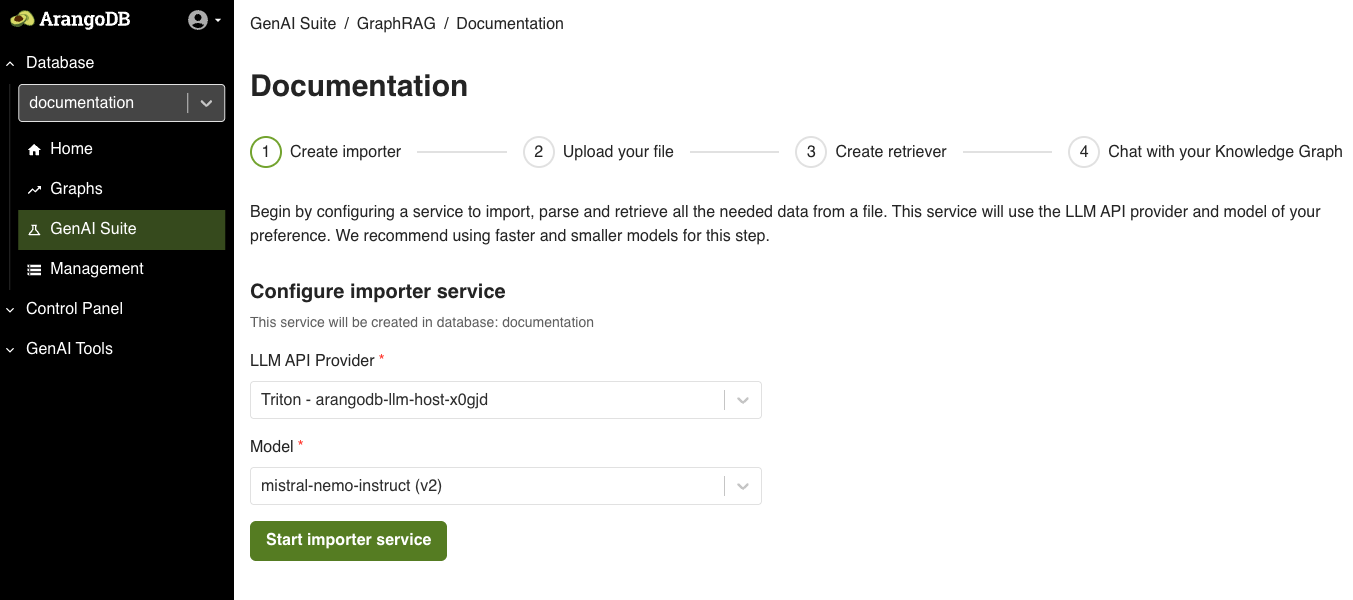

Configure the Importer service

Configure a service to import, parse, and retrieve all the needed data from a file. This service uses the LLM API provider and model of your choice.

After clicking on a project name, you are taken to a screen where you can configure and start a new importer service job. Follow the steps below.

OpenAI:

- Select OpenAI from the LLM API Provider dropdown menu.

- Select the model you want to use from the Model dropdown menu. By default, the service uses O4 Mini.

- Enter your OpenAI API Key.

- Click the Start importer service button.

OpenRouter:

- Select OpenRouter from the LLM API Provider dropdown menu.

- Select the model you want to use from the Model dropdown menu. By default, the service uses Mistral AI - Mistral Nemo.

- Enter your OpenAI API Key.

- Enter your OpenRouter API Key.

- Click the Start importer service button.

Triton:

- Select Triton from the LLM API Provider dropdown menu.

- Select the Triton model you want to use from the Model dropdown menu.

- Click the Start importer service button.
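The per-provider requirements of the importer form can be summarized as a small lookup table. The following Python sketch is purely illustrative: `IMPORTER_PROVIDERS` and `missing_fields` are hypothetical names, not part of any ArangoDB API, and the values only mirror the steps listed in this section.

```python
# Illustrative summary of the importer form, based only on the steps in this section.
# IMPORTER_PROVIDERS and missing_fields are hypothetical names, not an ArangoDB API.
IMPORTER_PROVIDERS = {
    "OpenAI": {
        "default_model": "O4 Mini",
        "required_keys": ["OpenAI API Key"],
    },
    "OpenRouter": {
        "default_model": "Mistral AI - Mistral Nemo",
        "required_keys": ["OpenAI API Key", "OpenRouter API Key"],
    },
    # The steps mention neither a default model nor an API key for Triton.
    "Triton": {"default_model": None, "required_keys": []},
}


def missing_fields(provider: str, provided_keys: set[str]) -> list[str]:
    """Return the API keys the form would still need for the given provider."""
    required = IMPORTER_PROVIDERS[provider]["required_keys"]
    return [key for key in required if key not in provided_keys]
```

For example, `missing_fields("OpenRouter", {"OpenAI API Key"})` reports that the OpenRouter API Key is still missing, which matches the two key fields listed for that provider.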

See also the GraphRAG Importer service documentation.

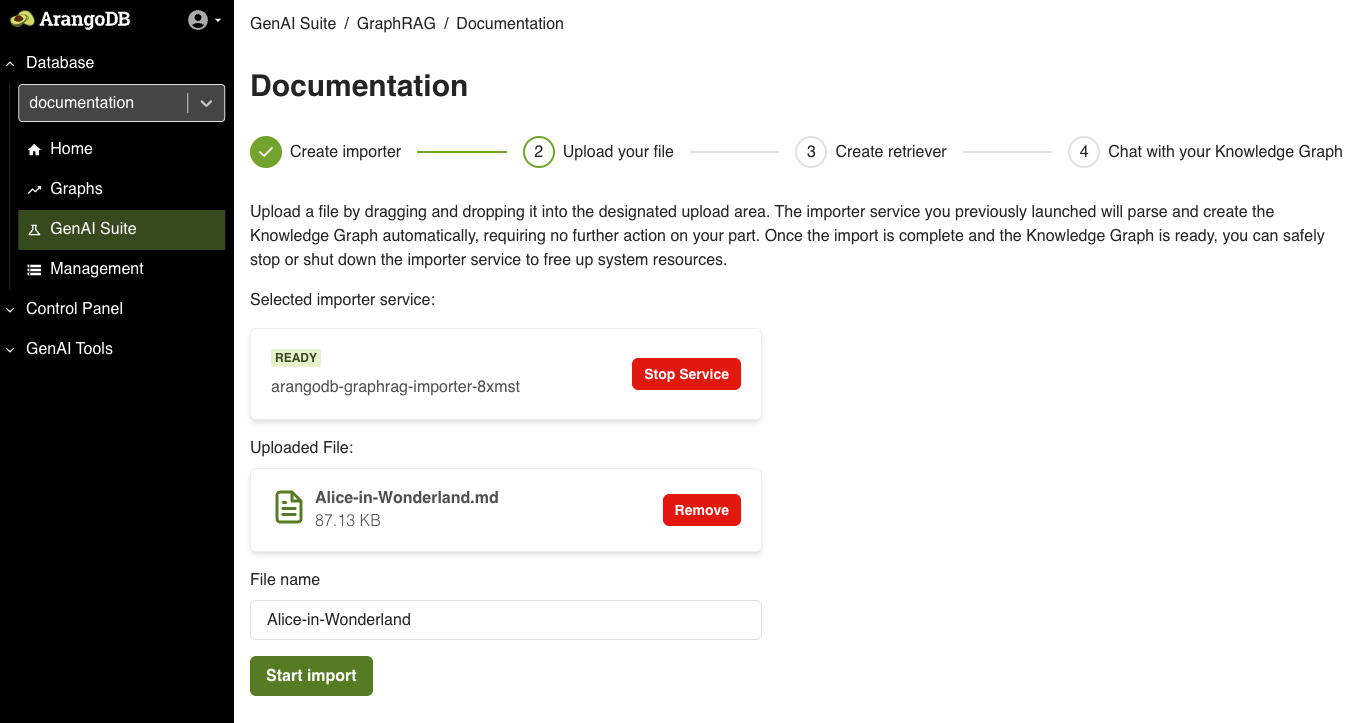

Upload your file

- Upload a file by dragging and dropping it in the designated upload area. The importer service you previously launched parses the file and creates the Knowledge Graph automatically.

- Enter a file name.

- Click the Start import button.

The file must be in .md or .txt format.
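Because only the .md and .txt formats are named here, it can be handy to check a file name before uploading it. The sketch below is a local convenience check, not something the Platform provides: `is_supported_upload` is a hypothetical helper, and treating the extension as case-insensitive is an assumption.

```python
from pathlib import Path

# Formats named in this section. The helper name is hypothetical.
ALLOWED_SUFFIXES = {".md", ".txt"}


def is_supported_upload(filename: str) -> bool:
    """Return True if the file name ends in .md or .txt.

    The comparison ignores case, which is an assumption rather than
    documented behavior of the upload form.
    """
    return Path(filename).suffix.lower() in ALLOWED_SUFFIXES
```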

Explore the Knowledge Graph

To open and explore the generated Knowledge Graph, click the Explore in visualizer button.

For more information, see the Graph Visualizer documentation.

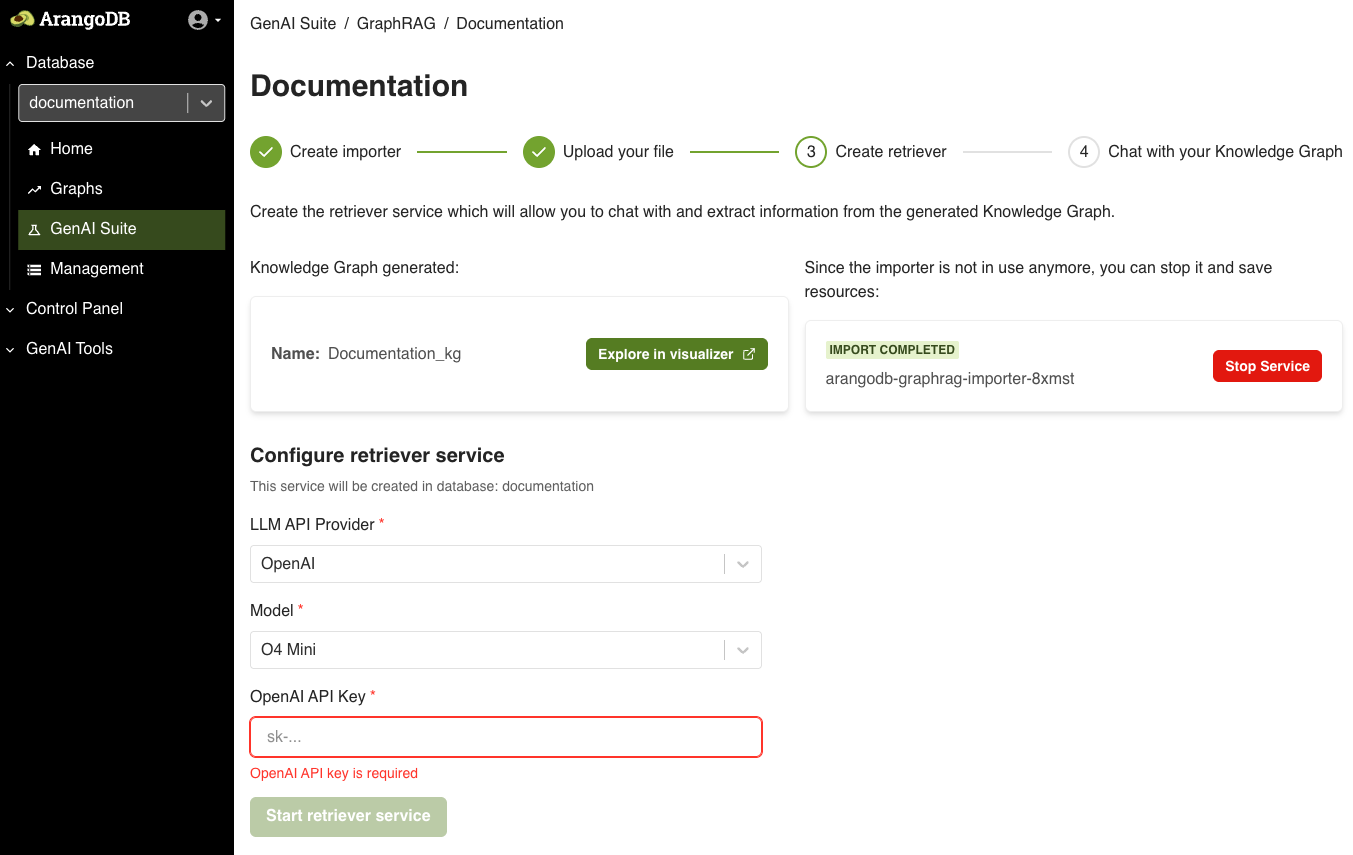

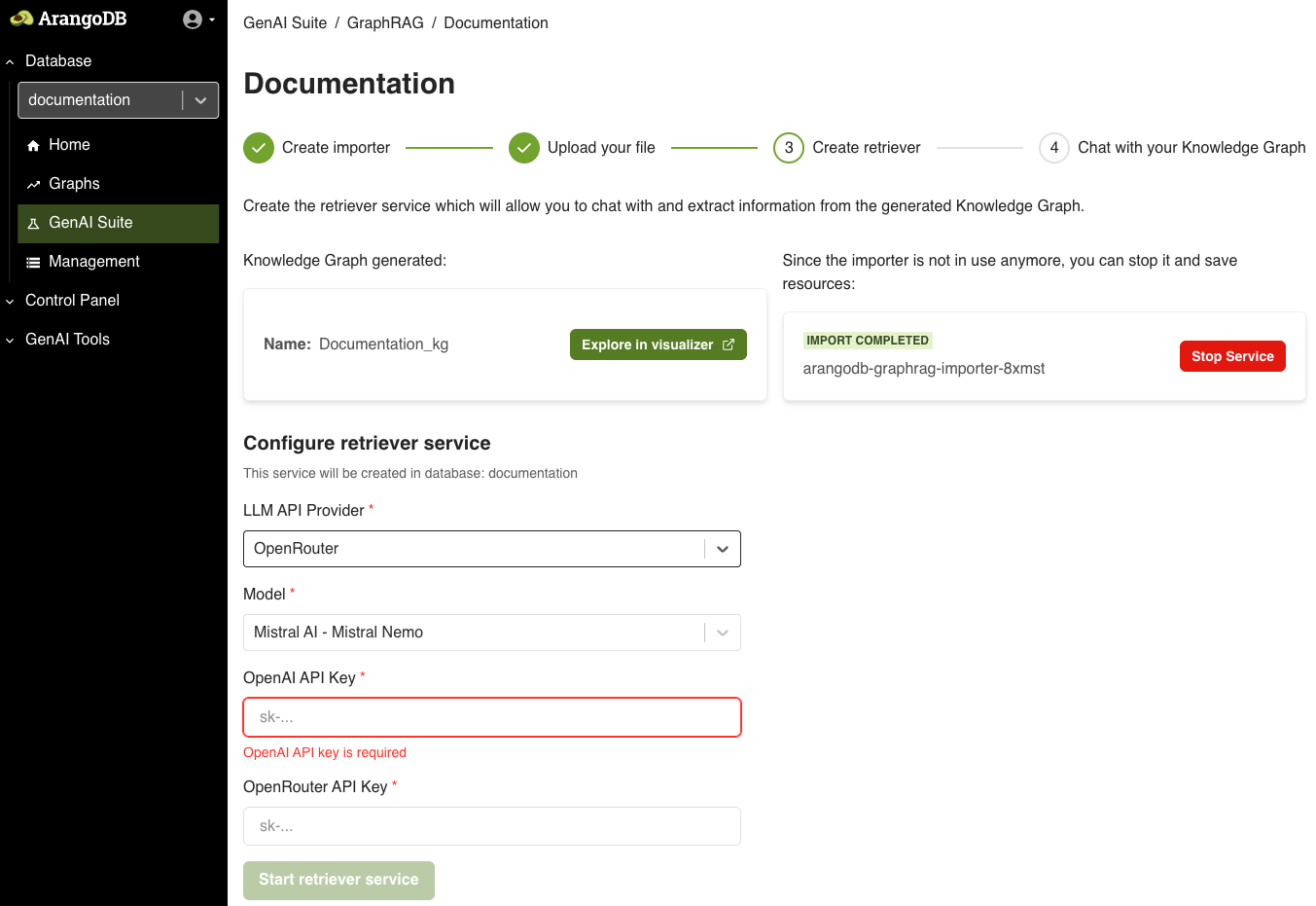

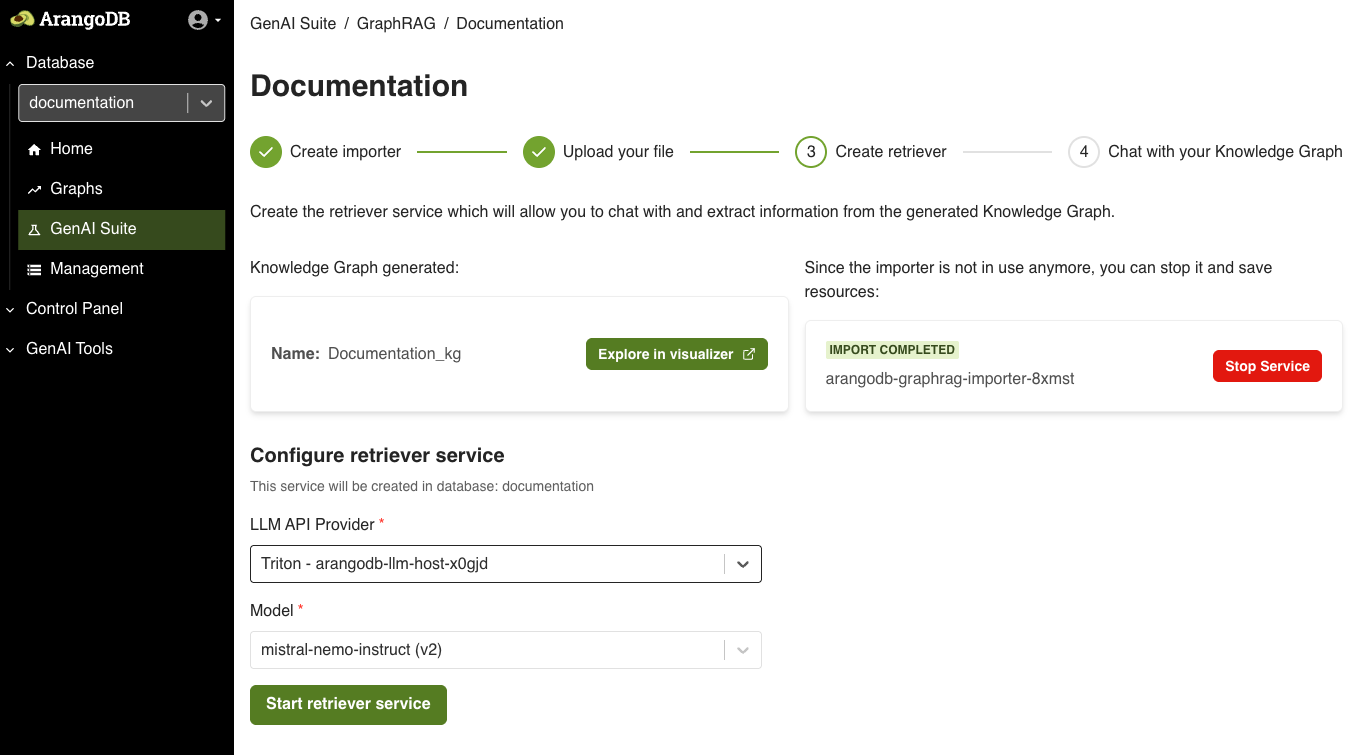

Configure the Retriever service

Creating the retriever service allows you to extract information from the generated Knowledge Graph. Follow the steps below to configure the service.

OpenAI:

- Select OpenAI from the LLM API Provider dropdown menu.

- Select the model you want to use from the Model dropdown menu. By default, the service uses O4 Mini.

- Enter your OpenAI API Key.

- Click the Start retriever service button.

OpenRouter:

- Select OpenRouter from the LLM API Provider dropdown menu.

- Select the model you want to use from the Model dropdown menu. By default, the service uses Mistral AI - Mistral Nemo.

- Enter your OpenRouter API Key.

- Click the Start retriever service button.

Triton:

- Select Triton from the LLM API Provider dropdown menu.

- Select the Triton model you want to use from the Model dropdown menu.

- Click the Start retriever service button.

See also the GraphRAG Retriever documentation.

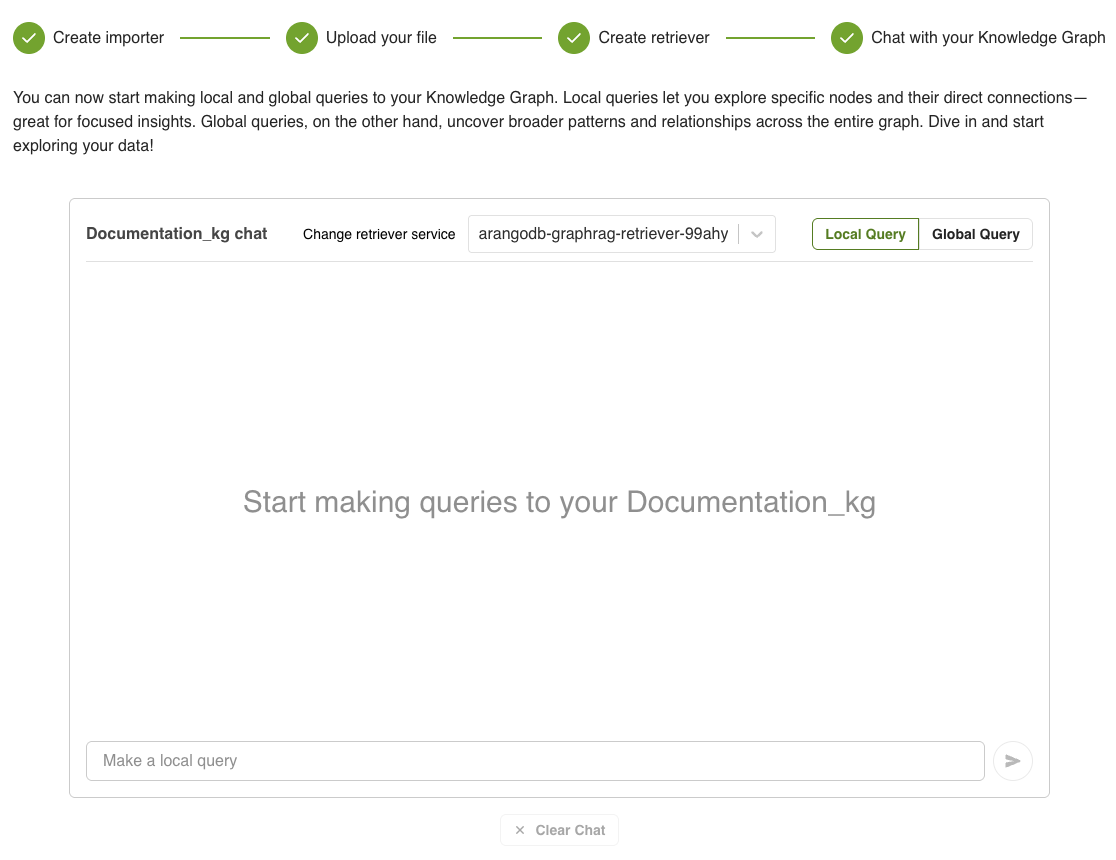

Chat with your Knowledge Graph

The Retriever service provides two search methods:

- Local search: Local queries let you explore specific nodes and their direct connections.

- Global search: Global queries uncover broader patterns and relationships across the entire Knowledge Graph.
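To make the difference in scope concrete, the following toy sketch contrasts the two on a small in-memory graph. It does not show how the Retriever service is implemented: the edge data and the function names `local_view` and `global_view` are invented for illustration.

```python
from collections import Counter

# Toy edge list (source, relation, target), invented for illustration only.
EDGES = [
    ("Alice", "works_at", "Acme"),
    ("Bob", "works_at", "Acme"),
    ("Alice", "knows", "Bob"),
    ("Acme", "located_in", "Berlin"),
]


def local_view(node: str) -> list[tuple[str, str, str]]:
    """Local scope: only the edges that touch one specific node."""
    return [edge for edge in EDGES if node in (edge[0], edge[2])]


def global_view() -> Counter:
    """Global scope: an aggregate over every edge, here a count per relation type."""
    return Counter(relation for _, relation, _ in EDGES)
```

A local question such as "who is connected to Alice?" only needs `local_view("Alice")`, while a global question such as "which kinds of relationships dominate the graph?" has to look at every edge, as `global_view()` does.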

In addition to querying the Knowledge Graph, the chat service allows you to do the following:

- Switch the search method from Local Query to Global Query and vice versa directly in the chat

- Change the retriever service

- Clear the chat

- Integrate the Knowledge Graph chat service into your own applications